Thursday, November 12, 2020

Friday, October 30, 2020

List of blogs and sites

Following the line of my last post, here is a list of blogs and sites that are useful to know and consult:

List of talks

Inspired by my "list of books" posts, I decided to make a list of some of the most interesting talks that I've watched in the last few years. Here it is:

- Managing Unconscious Bias: excellent Facebook content designed to help us recognize our biases so we can reduce their negative effects in the workplace.

- Python Concurrency From the Ground Up: LIVE!: an all-live-demo talk by David Beazley about threads, event loops and coroutines in Python. The most epic live session I've ever seen!

- The Many Meanings of Event-Driven Architecture: event-driven systems are not all equal. In this talk, Martin Fowler presents four patterns which tend to appear under the title of "event-driven" (event notification, event-based state transfer, event sourcing, and CQRS) as well as the architectural assumptions and implications of each one.

- Beyond PEP 8 -- Best Practices For Beautiful Intelligible Code: Raymond Hettinger is one of my favorite speakers. In this fantastic talk, he shows how looking at Python code beyond the PEP 8 rules can lead to higher-quality (and more Pythonic) code.

- 10 Things I Regret About Node.js: In this iconic presentation, the creator of Node.js, Ryan Dahl, comments on the technical decisions he currently considers flaws in Node.js and uses them as motivators to introduce the public to his new project: Deno.

- Applied Performance Theory: Kavya Joshi offers great content on performance theory and its uses in real systems. A mandatory talk for those who want to carry out load tests properly.

- The History of Fire Escapes: Tanya Reilly looks at what can be learned from real world fire codes about expecting failure and designing for it. A deep reflection showing that investing in more resilient and "fireproof" software can be much more effective than just investing in response to incidents.

Saturday, September 26, 2020

List of books (3rd edition)


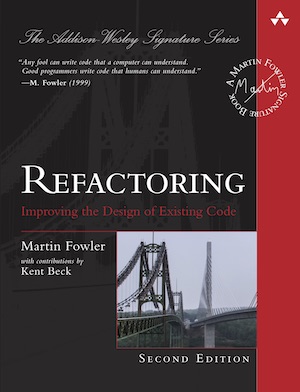

Refactoring: Improving the Design of Existing Code (2nd Edition)

Refactoring is one of those books that contains a lot of useful and practical wisdom. Martin Fowler presents the techniques of refactoring as a set of well-defined mechanics that can (and should) be applied to daily programming to improve the readability and maintainability of existing codebases. I had a good experience reading it from cover to cover. However, I think it would be equally good to read only the first few chapters in order, leaving the catalog to be read later as each refactoring is needed.

The Manager's Path: A Guide for Tech Leaders Navigating Growth and Change

The Manager's Path guides you through a journey from learning how to be managed to the complexities of being a CTO. With an articulate writing style, Camille Fournier shares excellent words of wisdom and, as she focuses specifically on engineering management, delivers content that is much more valuable to technologists than generic management books. Even those software engineers who do not intend to pursue a management career will benefit greatly from reading the book, especially the early chapters on how to be managed, what to expect from a manager, mentoring and being a tech lead.

Building Evolutionary Architectures: Support Constant Change

Rebecca Parsons and her colleagues do a great job bringing many concepts and ideas from evolutionary computing — and biological evolution in general — into the world of software architecture. They don't take a position of defending their approach as the best way (or the right way) to build software, but rather show what kind of considerations should be made if a software architect or engineering team wants to put architectural evolution among their priorities when developing systems. Highly recommended.

Composition and multiple inheritance

One of my last posts is a quote from Martin Fowler's Refactoring, in which he says that "inheritance has its downsides. Most obviously, it's a card that can only be played once. If I have more than one reason to vary something, I can only use inheritance for a single axis of variation."

After some time, I started to wonder whether this problem is solved by multiple inheritance. Fortunately, this issue is addressed by Steven Lowe's post Composition vs. Inheritance: How to Choose?, in which he argues that inheritance relationships should not cross domain boundaries (implementation domain vs. application domain). Thus, although inheritance can be used for mechanical and semantic needs, using it for both will tangle the two dimensions, confusing the taxonomy. In that sense, even when inheritance is a card that can be played multiple times, you should consider carefully whether it is better to play it only once.

Saturday, September 19, 2020

Replace superclass with delegate

Martin Fowler, "Replace Superclass with Delegate", in Refactoring: Improving the Design of Existing Code (2nd Edition), 399-400.

In object-oriented programs, inheritance is a powerful and easily available way to reuse existing functionality. I inherit from some existing class, then override and add additional features. But subclassing can be done in a way that leads to confusion and complication.

One of the classic examples of mis-inheritance from the early days of objects was making a stack be a subclass of list. The idea that led to this was reusing of list's data storage and operations to manipulate it. While it's good to reuse, this inheritance had a problem: All the operations of the list were present on the interface of the stack, although most of them were not applicable to a stack. A better approach is to make the list into a field of the stack and delegate the necessary operations to it.

This is an example of one reason to use Replace Superclass with Delegate — if functions of the superclass don't make sense on the subclass, that's a sign that I shouldn't be using inheritance to use the superclass's functionality.
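As a rough illustration of the stack example (a sketch of my own, not code from the book; the class and method names are assumptions), the list can be held as a field so that only the stack operations are exposed:

```python
class Stack:
    """A stack that holds a list as a field instead of inheriting from it."""

    def __init__(self):
        # The delegate: list storage is reused, the list interface is not exposed.
        self._items = []

    def push(self, item):
        # Forwarding function: delegates to list.append.
        self._items.append(item)

    def pop(self):
        # Forwarding function: delegates to list.pop.
        return self._items.pop()

    def peek(self):
        return self._items[-1]

    def __len__(self):
        return len(self._items)


stack = Stack()
stack.push(1)
stack.push(2)
top = stack.pop()
```

Operations such as `insert` or `sort`, which make no sense on a stack, are simply not part of this interface.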

As well as using all the functions of the superclass, it should also be true that every instance of the subclass is an instance of the superclass and a valid object in all cases where we're using the superclass. If I have a car model class, with things like name and engine size, I might think I could reuse these features to represent a physical car, adding functions for VIN number and manufacturing date. This is a common, and often subtle, modeling mistake which I've called the type-instance homonym.

These are both examples of problems leading to confusion and errors — which can be easily avoided by replacing inheritance with delegation to a separate object. Using delegation makes it clear that it is a separate thing — one where only some of the functions carry over.
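The car model case could be sketched as follows. This is only an illustration under assumed names (`CarModel`, `Car`, the field names and the sample values are all hypothetical): a physical car refers to its model instead of inheriting from it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CarModel:
    """The type: data shared by every car of this model."""
    name: str
    engine_size_liters: float


@dataclass
class Car:
    """The instance: one physical car, delegating model data to a CarModel."""
    model: CarModel  # delegate, not superclass
    vin: str
    manufactured_on: date

    @property
    def name(self) -> str:
        # Forwarding: only the model features that make sense on a car carry over.
        return self.model.name

    @property
    def engine_size_liters(self) -> float:
        return self.model.engine_size_liters


roadster = CarModel(name="Roadster X", engine_size_liters=1.4)
first_car = Car(model=roadster, vin="VIN-0001", manufactured_on=date(2020, 1, 15))
second_car = Car(model=roadster, vin="VIN-0002", manufactured_on=date(2020, 2, 3))
```

Both cars share one model object, and a `Car` is never mistaken for a `CarModel`.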

Even in cases where the subclass is reasonable modeling, I use Replace Superclass with Delegate because the relationship between a sub- and superclass is highly coupled, with the subclass easily broken by changes in the superclass. The downside is that I need to write a forwarding function for any function that is the same in the host and in the delegate — but, fortunately, even though such forwarding functions are boring to write, they are too simple to get wrong.

As a consequence of all this, some people advise avoiding inheritance entirely — but I don't agree with that. Provided the appropriate semantic conditions apply (every method on the supertype applies to the subtype, every instance of the subtype is an instance of the supertype), inheritance is a simple and effective mechanism. I can easily apply Replace Superclass with Delegate should the situation change and inheritance is no longer the best option. So my advice is to (mostly) use inheritance first, and apply Replace Superclass with Delegate when (and if) it becomes a problem.

Replace subclass with delegate

Martin Fowler, "Replace Subclass with Delegate", in Refactoring: Improving the Design of Existing Code (2nd Edition), 381-398.

If I have some objects whose behavior varies from category to category, the natural mechanism to express this is inheritance. I put all the common data and behavior in the superclass, and let each subclass add and override features as needed. Object-oriented languages make this simple to implement and thus a familiar mechanism.

But inheritance has its downsides. Most obviously, it's a card that can only be played once. If I have more than one reason to vary something, I can only use inheritance for a single axis of variation. So, if I want to vary behavior of people by their age category and by their income level, I can either have subclasses for young and senior, or for well-off and poor — I can't have both.

A further problem is that inheritance introduces a very close relationship between classes. Any change I want to make to the parent can easily break children, so I have to be careful and understand how children derive from the superclass. This problem is made worse when the logic of the two classes resides in different modules and is looked after by different teams.

Delegation handles both of these problems. I can delegate to many different classes for different reasons. Delegation is a regular relationship between objects — so I can have a clear interface to work with, which is much less coupling than subclassing. It's therefore common to run into the problems with subclassing and apply Replace Subclass with Delegate.
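To make the two axes of variation concrete, here is a minimal sketch, assuming hypothetical delegate classes and made-up numbers: a person delegates to one object for the age category and to another for the income level, so both can vary independently.

```python
class Young:
    def ticket_discount(self):
        return 0.5


class Senior:
    def ticket_discount(self):
        return 0.25


class WellOff:
    def monthly_subsidy(self):
        return 0


class Poor:
    def monthly_subsidy(self):
        return 100


class Person:
    """Varies along two axes by delegating to two separate objects."""

    def __init__(self, name, age_category, income_level):
        self.name = name
        self._age_category = age_category  # first axis of variation
        self._income_level = income_level  # second axis of variation

    def ticket_discount(self):
        return self._age_category.ticket_discount()

    def monthly_subsidy(self):
        return self._income_level.monthly_subsidy()


ana = Person("Ana", Young(), Poor())
bob = Person("Bob", Senior(), WellOff())
```

With subclassing alone, each combination (young and poor, senior and well-off, and so on) would need its own class.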

There is a popular principle: "Favor object composition over class inheritance" (where composition is effectively the same as delegation). Many people take this to mean "inheritance considered harmful" and claim that we should never use inheritance. I use inheritance frequently, partly because I always know I can use Replace Subclass with Delegate should I need to change it later. Inheritance is a valuable mechanism that does the job most of the time without problems. So I reach for it first, and move onto delegation when it starts to rub badly. This usage is actually consistent with the principle — which comes from the Gang of Four book that explains how inheritance and composition work together. The principle was a reaction to the overuse of inheritance.

Those who are familiar with the Gang of Four book may find it helpful to think of this refactoring as replacing subclasses with the State or Strategy patterns. Both of these patterns are structurally the same, relying on the host delegating to a separate hierarchy. Not all cases of Replace Subclass with Delegate involve an inheritance hierarchy for the delegate, but setting up a hierarchy for states or strategies is often useful.

[...] This is one of those refactorings where I don't feel that refactoring alone improves the code. Inheritance handles this situation very well, whereas using delegation involves adding dispatch logic, two-way references, and thus extra complexity. The refactoring may still be worthwhile, since the advantage of a mutable premium status, or a need to use inheritance for other purposes, may outweigh the disadvantage of losing inheritance.
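The passage above is excerpted, so the following is only a guess at the shape of the trade-off, with hypothetical names (`Booking`, `PremiumDelegate`, `be_premium`) and made-up prices. It shows the dispatch logic and the two-way reference that delegation adds, and the mutable premium status gained in return:

```python
class PremiumDelegate:
    """Holds the premium-only behavior; refers back to its host."""

    def __init__(self, host, extras_fee):
        self._host = host  # back-reference: one half of the two-way link
        self._extras_fee = extras_fee

    def price(self):
        return self._host.base_price() + self._extras_fee


class Booking:
    def __init__(self, base_price):
        self._base_price = base_price
        self._premium = None  # the other half of the two-way link, set on upgrade

    def be_premium(self, extras_fee):
        # Premium status can change after construction, which a subclass cannot do.
        self._premium = PremiumDelegate(self, extras_fee)

    def base_price(self):
        return self._base_price

    def price(self):
        # Dispatch logic that inheritance would have handled implicitly.
        if self._premium is not None:
            return self._premium.price()
        return self.base_price()


booking = Booking(base_price=100)
price_before = booking.price()
booking.be_premium(extras_fee=25)
price_after = booking.price()
```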

Tuesday, August 18, 2020

Trust the process

Paul Ford, in Trust the process.

The only way to do more is to start by doing less.

[...] Good process doesn’t have a name because it doesn’t need one.

[...] If you want to measure how your team is working, measure how little they discuss their process.

Thursday, August 13, 2020

Just fix it

Martin Fowler, "Remove Middle Man", in Refactoring: Improving the Design of Existing Code (2nd Edition), 192.

I can adjust my code as time goes on. [...] A good encapsulation six months ago may be awkward now. Refactoring means I never have to say I'm sorry — I just fix it.

Thursday, June 11, 2020

All models are wrong, but some are useful

George Edward Pelham Box, Alberto Luceño and Maria Del Carmen Paniagua-Quinones, "What Can Go Wrong and What Can We Do About It?" in Statistical Control by Monitoring and Adjustment (2nd Edition), 61.

All models are approximations. Assumptions, whether implied or clearly stated, are never exactly true. All models are wrong, but some models are useful. So the question you need to ask is not "Is the model true?" (it never is) but "Is the model good enough for this particular application?"

Friday, May 15, 2020

Comments

Martin Fowler, "Bad Smells in Code", in Refactoring: Improving the Design of Existing Code (2nd Edition), 84.

Don't worry, we aren't saying that people shouldn't write comments. In our olfactory analogy, comments aren't a bad smell; indeed they are a sweet smell. The reason we mention comments here is that comments are often used as a deodorant. It's surprising how often you look at thickly commented code and notice that the comments are there because the code is bad.

Comments lead us to bad code that has all the rotten whiffs we've discussed in the rest of this chapter. Our first action is to remove the bad smells by refactoring. When we've finished, we often find that the comments are superfluous.

If you need a comment to explain what a block of code does, try Extract Function. If the method is already extracted but you still need a comment to explain what it does, use Change Function Declaration to rename it. If you need to state some rules about the required state of the system, use Introduce Assertion.
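A small sketch of how these three refactorings can replace comments (my own example, not the book's; the function names and the discount rule are assumptions). The extracted function's name stands in for a "what this block does" comment, and the assertion stands in for a comment about the required state:

```python
def subtotal(prices):
    # Extract Function: this body was once an inline block under a comment
    # such as "add up the prices"; the function name now carries that meaning.
    return sum(prices)


def discounted_total(prices, discount_rate):
    # Introduce Assertion: the rule about valid input is stated in code
    # rather than in a comment that can drift out of date.
    assert 0 <= discount_rate <= 1, "discount_rate must be between 0 and 1"
    return subtotal(prices) * (1 - discount_rate)


total = discounted_total([10, 20], 0.5)
```

If `subtotal` had first been extracted under a vaguer name, Change Function Declaration would be the step that renames it until no explanatory comment is needed.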

A good time to use a comment is when you don't know what to do. In addition to describing what is going on, comments indicate areas in which you aren't sure. A comment can also explain why you did something. This kind of information helps future modifiers, especially forgetful ones.

Refactoring and performance II

Martin Fowler, "Principles in Refactoring", in Refactoring: Improving the Design of Existing Code (2nd Edition), 64-67.

A common concern with refactoring is the effect it has on the performance of a program. To make the software easier to understand, I often make changes that will cause the program to run slower. This is an important issue. I don't belong to the school of thought that ignores performance in favor of design purity or in hopes of faster hardware. Software has been rejected for being too slow, and faster machines merely move the goalposts. Refactoring can certainly make software go more slowly — but it also makes the software more amenable to performance tuning. The secret to fast software, in all but hard-real time contexts, is to write tunable software first and then tune it for sufficient speed.

I've seen three general approaches to writing fast software. The most serious of these is time budgeting, often used in hard-real time systems. As you decompose the design, you give each component a budget for resources — time and footprint. That component must not exceed its budget, although a mechanism for exchanging budgeted resources is allowed. Time budgeting focuses attention on hard performance times. It is essential for systems, such as heart pacemakers, in which late data is always bad data. This technique is inappropriate for other kinds of systems, such as the corporate information systems with which I usually work.

The second approach is the constant attention approach. Here, every programmer, all the time, does whatever she can to keep performance high. This is a common approach that is intuitively attractive — but it does not work very well. Changes that improve performance usually make the program harder to work with. This slows development. This would be a cost worth paying if the resulting software were quicker — but usually it is not. The performance improvements are spread all around the program; each improvement is made with a narrow perspective of the program's behavior, and often with a misunderstanding of how a compiler, runtime, and hardware behaves.

Ron Jeffries: Even if you know exactly what is going on in your system, measure performance, don't speculate. You'll learn something, and nine times out of ten, it won't be that you were right!

The interesting thing about performance is that in most programs, most of their time is spent in a small fraction of the code. If I optimize all the code equally, I'll end up with 90 percent of my work wasted because it's optimizing code that isn't run much. The time spent making the program fast — the time lost because of lack of clarity — is all wasted time.

The third approach to performance improvement takes advantage of this 90-percent statistic. In this approach, I build my program in a well-factored manner without paying attention to performance until I begin a deliberate performance optimization exercise. During this performance optimization, I follow a specific process to tune the program.

I begin by running the program under a profiler that monitors the program and tells me where it is consuming time and space. This way I can find that small part of the program where the performance hot spots lie. I then focus on those performance hot spots using the same optimizations I would use in the constant-attention approach. But since I'm focusing my attention on a hot spot, I'm getting much more effect with less work. Even so, I remain cautious. As in refactoring, I make the changes in small steps. After each step I compile, test, and rerun the profiler. If I haven't improved performance, I back out the change. I continue the process of finding and removing hot spots until I get the performance that satisfies my users.
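In Python, the first step of this process might look like the sketch below, which uses the standard library's `cProfile` and `pstats` modules. The workload (`slow_sum_of_squares`, `run`) is a made-up stand-in for a real program:

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    # Deliberately naive loop that acts as the hot spot.
    total = 0
    for i in range(n):
        total += i * i
    return total


def run():
    return [slow_sum_of_squares(20_000) for _ in range(20)]


# Run the workload under the profiler.
profiler = cProfile.Profile()
profiler.enable()
result = run()
profiler.disable()

# Collect the five most expensive entries by cumulative time.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(5)
report = buffer.getvalue()
print(report)
```

The report points at `slow_sum_of_squares` as the place to tune. After each small change, rerunning the same script shows whether the change helped or should be backed out.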

Having a well-factored program helps with this style of optimization in two ways. First, it gives me time to spend on performance tuning. With well-factored code, I can add functionality more quickly. This gives me more time to focus on performance. (Profiling ensures I spend that time on the right place). Second, with a well-factored program I have finer granularity for my performance analysis. My profiler leads me to smaller parts of the code, which are easier to tune. With clearer code, I have a better understanding of my options and of what kind of tuning will work.

I've found that refactoring helps me write fast software. It slows the software in the short term while I'm refactoring, but makes it easier to tune during optimization. I end up well ahead.

Wednesday, May 13, 2020

Refactoring, architecture and yagni

Martin Fowler, "Principles in Refactoring", in Refactoring: Improving the Design of Existing Code (2nd Edition), 62-63.

Refactoring has profoundly changed how people think about software architecture. Early in my career, I was taught that software design and architecture was something to be worked on, and mostly completed, before anyone started writing code. Once the code was written, its architecture was fixed and could only decay due to carelessness.

Refactoring changes this perspective. It allows me to significantly alter the architecture of the software that's been running in production for years. Refactoring can improve the design of existing code, as this book's subtitle implies. But as I indicated earlier, changing legacy code is often challenging, especially when it lacks decent tests.

The real impact of refactoring on architecture is in how it can be used to form a well-designed code base that can respond gracefully to changing needs. The biggest issue with finishing architecture before coding is that such an approach assumes the requirements for the software can be understood early on. But experience shows that this is often, even usually, an unachievable goal. Repeatedly, I saw people only understand what they really needed from software once they'd had a chance to use it, and saw the impact it made to their work.

One way of dealing with future changes is to put flexibility mechanisms into the software. As I write some function, I can see that it has a general applicability. To handle the different circumstances that I anticipate it to be used in, I can see a dozen parameters I could add to that function. These parameters are flexibility mechanisms — and, like most mechanisms, they are not a free lunch. Adding all those parameters complicates the function for the one case it's used right now. If I miss a parameter, all the parameterization I have added makes it harder for me to add more. I find I often get my flexibility mechanisms wrong — either because the changing needs didn't work out the way I expected or my mechanism design was faulty. Once I take all that into account, most of the time my flexibility mechanisms actually slow down my ability to react to change.

With refactoring, I can use a different strategy. Instead of speculating on what flexibility I will need in the future and what mechanisms will best enable that, I build software that solves only the currently understood needs, but I make this software excellently designed for those needs. As my understanding of the users' needs changes, I use refactoring to adapt the architecture to those new demands. I can happily include mechanisms that don't increase complexity (such as small, well-named functions) but any flexibility that complicates the software has to prove itself before I include it. If I don't have different values for a parameter from the callers, I don't add it to the parameter list. Should the time come that I need to add it, then Parameterize Function is an easy refactoring to apply. I often find it useful to estimate how hard it would be to use refactoring later to support an anticipated change. Only if I can see that it would be substantially harder to refactor later do I consider adding a flexibility mechanism now.
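A minimal sketch of this strategy, with hypothetical function names and figures: the function starts with no parameter because every caller wants the same value, and Parameterize Function is applied only once a second value actually shows up.

```python
# Step 1: every caller needs a 10 percent raise, so there is no parameter yet.
def ten_percent_raise(salary):
    return salary * 110 / 100


# Step 2: a caller now needs 5 percent. Parameterize Function turns the
# literal into an argument instead of adding a near-duplicate function.
def raise_salary(salary, percent):
    return salary * (100 + percent) / 100


old_result = ten_percent_raise(1000)
new_result = raise_salary(1000, 10)
other_result = raise_salary(1000, 5)
```

The parameter had to prove itself first: it appears only when callers really pass different values.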

This approach to design goes under various names: simple design, incremental design, or yagni (originally an acronym for "you aren't going to need it"). Yagni doesn't imply that architectural thinking disappears, although it is sometimes naively applied that way. I think of yagni as a different style of incorporating architecture and design into the development process — a style that isn't credible without the foundation of refactoring.

Adopting yagni doesn't mean I neglect all upfront architectural thinking. There are still cases where refactoring changes are difficult and some preparatory thinking can save time. But the balance has shifted a long way — I'm much more inclined to deal with issues later when I understand them better. All this has led to a growing discipline of evolutionary architecture where architects explore the patterns and practices that take advantage of our ability to iterate over architectural decisions.

Sunday, March 29, 2020

Principles in refactoring

Martin Fowler, "Principles in Refactoring", in Refactoring: Improving the Design of Existing Code (2nd Edition), 45-55.

Refactoring (noun): a change made to the internal structure of software to make it easier to understand and cheaper to modify without changing its observable behavior.

Refactoring (verb): to restructure software by applying a series of refactorings without changing its observable behavior.

If someone says their code was broken for a couple of days while they are refactoring, you can be pretty sure they were not refactoring.

Why should we refactor?

When I talk about refactoring, people can easily see that it improves quality. Better internal design, readability, reducing bugs — all these improve quality. But doesn't the time I spend on refactoring reduce the speed of development?

Software with a good internal design allows me to easily find how and where I need to make changes to add a new feature. Good modularity allows me to only have to understand a small subset of the code base to make a change. If the code is clear, I'm less likely to introduce a bug, and if I do, the debugging effort is much easier.

I refer to this effect as the Design Stamina Hypothesis: By putting our effort into a good internal design, we increase the stamina of the software effort, allowing us to go faster for longer.

Since it is very difficult to do a good design up front, refactoring becomes vital to achieving that virtuous path of rapid functionality.

When should we refactor?

Preparatory refactoring — making it easier to add a feature

The best time to refactor is just before I need to add a new feature to the code base. As I do this, I look at the existing code and, often, see that if it were structured a little differently, my work would be much easier. The same happens when fixing a bug.

Comprehension refactoring — making code easier to understand

Whenever I have to think to understand what the code is doing, I ask myself if I can refactor the code to make that understanding more immediately apparent. As Ward Cunningham puts it, by refactoring I move the understanding from my head into the code itself.

Litter-pickup refactoring

A variation of comprehension refactoring is when I understand what the code is doing, but realize that it's doing it badly. There's a bit of a tradeoff here. I don't want to spend a lot of time distracted from the task I'm currently doing, but I also don't want to leave the trash lying around and getting in the way of future changes. If it's easy to change, I'll do it right away. If it's a bit more effort to fix, I might make a note of it and fix it when I'm done with my immediate task.

Sometimes, of course, it's going to take a few hours to fix, and I have more urgent things to do. Even then, however, it's usually worthwhile to make it a little bit better. If I make it a little better each time I pass through the code, over time it will get fixed.

Planned and opportunistic refactoring

The examples above are all opportunistic. This is an important point that's frequently missed. Refactoring isn't an activity that's separated from programming. I don't put time on my plans to do refactoring; most refactoring happens while I'm doing other things.

It's also a common error to see refactoring as something people do to fix past mistakes or clean up ugly code. Certainly you have to refactor when you run into ugly code, but excellent code needs plenty of refactoring too. Whenever I write code, I'm making tradeoffs. The tradeoffs I made correctly for yesterday's feature set may no longer be the right ones for the new features I'm adding today. The advantage is that clean code is easier to refactor when I need to change those tradeoffs to reflect the new reality.

All this doesn't mean that planned refactoring is always wrong. But such planned refactoring episodes should be rare. Most refactoring effort should be the unremarkable, opportunistic kind.

When should I not refactor?

It may sound like I always recommend refactoring — but there are cases when it's not worthwhile.

If I run across code that is a mess, but I don't need to modify it, then I don't need to refactor it. Some ugly code that I can treat as an API may remain ugly. It's only when I need to understand how it works that refactoring gives me any benefit.

Another case is when it's easier to rewrite it than to refactor it. This is a tricky decision. Often, I can't tell how easy it is to refactor some code unless I spend some time trying and thus get a sense of how difficult it is. The decision to refactor or rewrite requires good judgment and experience, and I can't really boil it down into a piece of simple advice.

Friday, March 20, 2020

The rule of three

Martin Fowler, "Principles in Refactoring", in Refactoring: Improving the Design of Existing Code (2nd Edition), 50.

Here's a guideline Don Roberts gave me: The first time you do something, you just do it. The second time you do something similar, you wince at the duplication, but you do the duplicate thing anyway. The third time you do something similar, you refactor.

Or for those who like baseball: Three strikes, then you refactor.

Saturday, February 29, 2020

Advice on refactoring

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 33.

Brevity is the soul of wit, but clarity is the soul of evolvable software.

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 34.

I always have to strike a balance between all the refactorings I could do and adding new features. At the moment, most people underprioritize refactoring — but there still is a balance. My rule is a variation on the camping rule: Always leave the code base healthier than when you found it. It will never be perfect, but it should be better.

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 43.

I'm talking about improving the code — but programmers love to argue about what good code looks like. [...] If we consider this to be a matter of aesthetics, where nothing is either good or bad but thinking makes it so, we lack any guide but personal taste. I believe, however, that we can go beyond taste and say that the true test of good code is how easy it is to change it.

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 44.

The key to effective refactoring is recognizing that you go faster when you take tiny steps, the code is never broken, and you can compose those small steps into substantial changes. Remember that — and the rest is silence.

Sunday, February 23, 2020

Putting evolutionary architecture into practice

Neal Ford, Rebecca Parsons & Patrick Kua, "Putting Evolutionary Architecture into Practice", in Building Evolutionary Architectures: Support Constant Change, 141-165.

Organizational factors

Teams structured around domains rather than technical capabilities have several advantages when it comes to evolutionary architecture and exhibit some common characteristics.

- Cross-functional teams: the goal of a domain-centric team is to eliminate operational friction. In other words, the team has all roles needed to design, implement, and deploy their service, including traditionally separate roles like operations.

- Organized around business capabilities: many architectural styles of the past decade focused heavily on maximizing shared resources because of expense. Shared resource architecture has inherent problems around inadvertent interference between parts. Now that developers have the option of creating custom-made environments and functionality, it is easier for them to shift emphasis away from technical architectures and focus more on domain-centric ones to better match the common unit of change in most software projects.

- Product over project: product teams take ownership of quality metrics and pay more attention to defects. This perspective also helps provide long-term vision to the team.

- Dealing with external change: a common practice in microservices architectures is the use of consumer-driven contracts, which are atomic fitness functions. Maintaining integration protocol consistency shouldn't be done manually when it is easy to build fitness functions to handle this chore. Using engineering practice to police practices via fitness functions relieves lots of manual pain from developers but requires a certain level of maturity to be successful.

- Connections between team members: the motivation to create small teams revolves around the desire to cut down on communication links. Each team shouldn't have to know what other teams are doing, unless integration points exist between the teams. Even then, fitness functions should be used to ensure integrity of integration points.
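To give the idea of a consumer-driven contract used as an atomic fitness function some shape, here is a very small sketch. Everything in it is hypothetical (the contract format, the field names, and `provider_response`, which stands in for a call to the real provider service); real projects would normally rely on a dedicated contract-testing tool.

```python
# The consumer publishes the fields it depends on and their expected types.
CONSUMER_CONTRACT = {"order_id": int, "status": str}


def provider_response():
    # Stand-in for the provider's actual response.
    return {"order_id": 42, "status": "shipped", "carrier": "ACME"}


def contract_violations(response, contract):
    """Return a list of ways the response breaks the consumer's contract."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            violations.append(f"wrong type for {field}")
    return violations


# The fitness function: run on every provider build, it fails the build
# when a change would break this consumer.
violations = contract_violations(provider_response(), CONSUMER_CONTRACT)
```

Extra fields such as `carrier` are ignored, so the provider stays free to evolve as long as what consumers rely on is preserved.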

Team coupling characteristics

Most architects don't think about how team structure affects the coupling characteristics of the architecture, but it has a huge impact.

- Culture: well-functioning architects take on leadership roles, creating the technical culture and designing approaches for how developers build systems. Adjusting the behavior of the team often involves adjusting the process around the team, as people respond to what is asked of them to do.

Tell me how you measure me, and I will tell you how I will behave.

Dr. Eliyahu M. Goldratt (The Haystack Syndrome)

- Culture of experimentation: successful evolution demands experimentation, but some companies fail to experiment because they are too busy delivering to plans. Successful experimentation is about running small activities on a regular basis to try out new ideas (both from a technical and product perspective) and to integrate successful experiments into existing systems.

CFO and budgeting

In an evolutionary architecture, architects strive to find the sweet spot between the proper quantum size and the corresponding costs. As we face an ecosystem that defies planning, many factors determine the best match between architecture and cost. This reflects our observation that the role of architect has expanded: architectural choices have more impact than ever. Rather than adhere to decades-old "best practices" guides about enterprise architecture, modern architects must understand the benefits of evolvable systems along with the inherent uncertainty that goes with them.

Where do you start?

While appropriate coupling and using modularity are some of the first steps you should take, sometimes there are other priorities. For example, if your data schema is hopelessly coupled, determining how DBAs can achieve modularity might be the first step.

- Low-hanging fruit: if an organization needs an early win to prove the approach, architects may choose the easiest problem that highlights the evolutionary architecture approach. Generally, this will be part of the system that is already decoupled to a large degree and hopefully not on the critical path to any dependencies. If teams use this effort as a proof-of-concept, developers should gather appropriate metrics for both before and after scenarios. Gathering concrete data is the best way for developers to vet the approach: remember the adage that demonstration defeats discussion. This "easiest first" approach minimizes risk at the possible expense of value, unless a team is lucky enough to have easy and high value align.

- Highest-value: an alternative approach to "easiest first" is "highest value first" — find the most critical part of the system and build evolutionary behavior around it first.

- Testing: if developers find themselves in a code base with anemic or no testing, they may decide to add some critical tests before undertaking the more ambitious move to evolutionary architecture. Testing is a critical component to the incremental change aspect of evolutionary architecture, and fitness functions leverage tests aggressively. Thus, at least some level of testing enables these techniques, and a strong correlation exists between comprehensiveness of testing and ease of implementing an evolutionary architecture.

- Infrastructure: for companies that have a dysfunctional infrastructure, getting those problems solved may be a precursor to building an evolutionary architecture.

Ultimately, the advice parallels the annoying-but-accurate consultant's answer of It Depends! Only architects, developers, DBAs, DevOps, testing, security, and the other host of contributors can ultimately determine the best roadmap toward evolutionary architecture.

Why should a company decide to build an evolutionary architecture?

- Predictable versus evolvable: many companies value long-term planning for resources and other strategic matters; companies obviously value predictability. However, because of the dynamic equilibrium of the software development ecosystem, predictability has expired. Building evolvable architecture takes extra time and effort, but the reward comes when the company can react to substantive shifts in the marketplace without major rework. The highly volatile nature of the development world increasingly pushes all organizations toward incremental change.

- Scale: any coupling point in an architecture eventually prevents scale, and relying on coordination at the database eventually hits a wall. Inappropriate coupling represents the biggest challenge to evolution. Building a scalable system also tends to correspond to an evolvable one.

- Advanced business capabilities: many companies look with envy at Facebook, Netflix, and other cutting-edge technology companies because they have sophisticated features. Incremental change allows well-known practices such as hypotheses and data-driven development.

- Cycle time as a business metric: building continuous deployment takes a fair amount of engineering sophistication — why would a company go quite that far? Because cycle time has become a business differentiator in some markets. Some large conservative organizations view software as overhead and thus try to minimize cost. Innovative companies see software as a competitive advantage. Many companies have made cycle time a first-class business metric, mostly because they live in a highly competitive market. All markets eventually become competitive in this way.

- Isolating architectural characteristics at the quantum level: a common problem in highly coupled architectures is inadvertent overengineering. In a more coupled architecture, developers would have to build scalability, resiliency, and elasticity into every service, complicating the ones that don't need those capabilities. Architects are accustomed to choosing architectures against a spectrum of trade-offs. Building architectures with clearly defined quantum boundaries allows exact specification of the required architectural characteristics.

- Adaptation versus evolution: many organizations fall into the trap of gradually increasing technical debt and reluctance to make needed restructuring modifications, which in turn makes systems and integration points increasingly brittle. Companies try to pave over the brittleness with connection tools like service buses, which alleviate some of the technical headaches but don't address deeper logical cohesion of business processes. Using a service bus is an example of adapting an existing system to use in another setting. But as we've highlighted previously, a side effect of adaptation is increased technical debt. When developers adapt something, they preserve the original behavior and layer new behavior alongside it. The more adaptation cycles a component endures, the more parallel behavior there is, increasing complexity, hopefully strategically. The use of feature toggles offers a good example of the benefits of adaptation. Often, developers use toggles when trying several alternatives via hypotheses-driven development, testing their users to see what resonates best. In this case, the technical debt imposed by toggles is purposeful and desirable. Of course, the engineering best practice around these types of toggles is to remove them as soon as the decision is resolved. Alternatively, evolving implies fundamental change. Building an evolvable architecture entails changing the architecture in situ, protected from breakages via fitness functions. The end result is a system that continues to evolve in useful ways without an increasing legacy of outdated solutions lurking within.

Why would a company choose not to build an evolutionary architecture?

We don't believe that evolutionary architecture is the cure for all ailments! Companies have several legitimate reasons to pass on these ideas.

- Can't evolve a ball of mud: one of the key "-ilities" architects neglect is feasibility: should the team undertake this project? If an architecture is a hopelessly coupled Big Ball of Mud, making it possible to evolve it cleanly will take an enormous amount of work, likely more than rewriting it from scratch. Companies are loath to throw away anything that has perceived value, but often rework is more costly than rewrite. How can companies tell if they're in this situation? The first step to converting an existing architecture into an evolvable one is modularity. Thus, a developer's first task requires finding whatever modularity exists in the current system and restructuring the architecture around those discoveries. Once the architecture becomes less entangled, it becomes easier for architects to see underlying structures and make reasonable determinations about the effort needed for restructuring.

- Other architectural characteristics dominate: evolvability is only one of many characteristics architects must weigh when choosing a particular architecture style. No architecture can fully support conflicting core goals. For example, building high performance and high scale into the same architecture is difficult. In some cases, other factors may outweigh evolutionary change.

- Sacrificial architecture: building a sacrificial architecture implies that architects aren't going to try to evolve it but rather replace it at the appropriate time with something more permanent. Cloud offerings make this an attractive option for companies experimenting with the viability of a new market or offering.

- Planning on closing the business soon: evolutionary architecture helps businesses adapt to changing ecosystem forces. If a company doesn't plan to be in business in a year, there's no reason to build evolvability into their architecture. Some companies are in this position; they just don't realize it yet.

Convincing others

Architects and developers struggle to make nontechnical managers and coworkers understand the benefits of something like evolutionary architecture. This is especially true of parts of the organization most disrupted by some of the necessary changes. For example, developers who lecture the operations group about doing their job incorrectly will generally find resistance. Rather than try to convince reticent parts of the organization, demonstrate how these ideas improve their practices.

The business case

Business people are often wary of ambitious IT projects, which sound like expensive replumbing exercises. However, many businesses find that many desirable capabilities have their basis in more evolutionary architectures.

- Moving fast without breaking things: most large enterprises complain about the pace of change within the organization. One side effect of building an evolutionary architecture manifests as better engineering efficiency. All the practices we call incremental change improve automation and efficiency. Defining top-level enterprise architecture concerns as fitness functions both unifies a disparate set of concerns under one umbrella and forces developers to think in terms of objective outcomes. Business people fear breaking change. If developers build an architecture that allows incremental change with better confidence than older architectures, both business and engineering win.

- Less risk: with improved engineering practices comes decreased risk. Once developers have confidence that their practices will allow them to make changes in the architecture without breaking things, companies can increase their release cadence.

Building evolutionary architectures

Our ideas about building evolutionary architectures build upon and rely on many existing things: testing, metrics, deployment pipelines, and a host of other supporting infrastructure and innovation. We're creating a new perspective to unify previously diversified concepts using fitness functions. We want architects to start thinking of architectural characteristics as evaluable things rather than ad hoc aspirations, allowing them to build more resilient architectures. The software development ecosystem is going to continue to churn out new ideas from unexpected places. Organizations who can react and thrive in that environment will have a serious advantage.

Sunday, February 16, 2020

Evolutionary architecture pitfalls and antipatterns

Neal Ford, Rebecca Parsons & Patrick Kua, "Evolutionary Architecture Pitfalls and Antipatterns", in Building Evolutionary Architectures: Support Constant Change, 12-139.

We identify two kinds of bad engineering practices that manifest in software projects: pitfalls and antipatterns. Many developers use the word antipattern as jargon for "bad", but the real meaning is more subtle. A software antipattern has two parts. First, an antipattern is a practice that initially looks like a good idea, but turns out to be a mistake. Second, better alternatives exist for most antipatterns. Architects notice many antipatterns only in hindsight, so they are hard to avoid. A pitfall looks superficially like a good idea but immediately reveals itself to be a bad path.

Technical architecture

Antipattern: vendor king

Some large enterprises buy Enterprise Resource Planning (ERP) software to handle common business tasks like accounting, inventory management, and other common chores. However, many organizations become overambitious with this category of software, leading to the vendor king antipattern, an architecture built entirely around a vendor product that pathologically couples the organization to a tool.

To escape this antipattern, treat all software as just another integration point, even if it initially has broad responsibilities. By assuming integration at the outset, developers can more easily replace behavior that isn't useful with other integration points, dethroning the king.

Pitfall: leaky abstractions

Increased tech stack complexity has made the abstraction distraction problem worse recently. Not only does the ecosystem change, but the constituent parts become more complex and intertwined over time as well. Our mechanism for protecting evolutionary change, fitness functions, can protect the fragile join points of architecture. Architects define invariants at key integration points as fitness functions, which run as part of a deployment pipeline, ensuring abstractions don't start to leak in undesirable ways.

Antipattern: last 10% trap

Another kind of reusability trap exists at the other end of the abstraction spectrum, with package software, platforms, and frameworks.

In 4GLs, 80% of what the client wants is quick and easy to build. These environments are modeled as rapid application development tools, with drag-and-drop support for UIs and other niceties. However, the next 10% of what the client wants is, while possible, extremely difficult, because that functionality wasn't built into the tool, framework, or language. So clever developers figure out a way to hack tools to make things work: adding a script to execute where static things are expected, chaining methods, and other hacks. The hack only gets you from 80% to 90%. Ultimately the tool can't solve the problem completely (a phrase we coined as the Last 10% Trap), leaving every project a disappointment. While 4GLs make it easy to build simple things fast, they don't scale to meet the demands of the real world.

Antipattern: code reuse abuse

Ironically, the more effort developers put into making code reusable, the harder it is to use. Making code reusable involves adding additional options and decision points to accommodate the different uses. The more developers add hooks to enable reusability, the more they harm the basic usability of the code.

Microservices eschew code reuse, adopting the philosophy of prefer duplication to coupling: reuse implies coupling, and microservices architectures are extremely decoupled. However, the goal in microservices isn't to embrace duplication but rather to isolate entities within domains. Services that share a common class are no longer independent. In a microservices architecture, Checkout and Shipping would each have their own internal representation of Customer. If they need to collaborate on customer-related information, they send the pertinent information to each other. Architects don't try to reconcile and consolidate the disparate versions of Customer in their architecture. The benefits of reuse are illusory and the coupling it introduces comes with its disadvantages. Thus, while architects understand the downsides of duplication, they offset that localized damage against the architectural damage that too much coupling introduces.

We're not suggesting that teams avoid building reusable assets, but rather that they evaluate them continually to ensure they still deliver value. When coupling points impede evolution or other important architectural characteristics, break the coupling by forking or duplication.
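To make the Checkout and Shipping arrangement concrete, here is a minimal sketch in which each service keeps its own customer class and the two exchange only plain data. The class names, field names, and message shape are assumptions made for illustration, not something the book prescribes.

```python
from dataclasses import dataclass


# Each service keeps its own view of a customer; no class is shared between them.
@dataclass
class CheckoutCustomer:
    customer_id: int
    payment_token: str
    shipping_address: str


@dataclass
class ShippingCustomer:
    customer_id: int
    delivery_address: str


def checkout_to_message(customer: CheckoutCustomer) -> dict:
    """Checkout publishes only the information Shipping needs, as plain data."""
    return {"customer_id": customer.customer_id, "address": customer.shipping_address}


def shipping_from_message(message: dict) -> ShippingCustomer:
    """Shipping translates the message into its own internal representation."""
    return ShippingCustomer(message["customer_id"], message["address"])
```

Checkout can rename or extend `CheckoutCustomer` freely; only a change to the message shape affects Shipping, and that boundary is the kind of integration point a fitness function can guard.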

All too often architects make a decision that is the correct decision at the time but becomes a bad decision over time because of changing conditions like dynamic equilibrium. Architects must continually evaluate the fitness of the "-ilities" of the architecture to ensure they still add value and haven't become antipatterns.

Pitfall: resume-driven development

Don't build architecture for the sake of architecture — you are trying to solve a problem. Always understand the problem domain before choosing an architecture rather than the other way around.

Incremental change

Antipattern: inappropriate governance

In modern environments, it is inappropriate governance to homogenize on a single technology stack. This leads to the inadvertent overcomplication problem, where governance decisions add useless multipliers to the effort required to implement a solution. When developers build monolith architectures, governance choices affect everyone. Thus, the architect must look at the requirements of every project and make a choice that will serve the most complex case. A small project may have simple needs yet must take on the full complexity for consistency.

With microservices, because none of the services are coupled via technical or data architecture, different teams can choose the right level of complexity and sophistication required to implement their service. This partitioning tends to work best when the team wholly owns their service, including the operational aspects.

Building services in different technology stacks is one way to achieve technical architecture decoupling. Many companies try to avoid this approach because they fear it hurts the ability to move employees across projects. However, Chad Fowler, an architect at Wunderlist, took the opposite approach: he insisted that teams use different technology stacks to avoid inadvertent coupling. His philosophy is that accidental coupling is a bigger problem than developer portability.

From a practical governance standpoint in large organizations, we find the Goldilocks Governance model works well: pick three technology stacks for standardization — simple, intermediate, and complex — and allow individual service requirements to drive stack requirements. This gives teams the flexibility to choose a suitable technology stack while still providing the company some benefits of standards.

Pitfall: lack of speed to release

While the extreme version of Continuous Delivery, continuous deployment, isn't required for an evolutionary architecture, a strong correlation exists between the ability to release software and the ability to evolve that software design.

If companies build an engineering culture around continuous deployment, expecting that all changes will make their way to production only if they pass the gauntlet laid out by the deployment pipeline, developers become accustomed to constant change. On the other hand, if releases are a formal process that requires a lot of specialized work, the chances of being able to leverage evolutionary architecture diminish.

Cycle time is therefore a critical metric in evolutionary architecture projects — faster cycle time implies a faster ability to evolve.

Business concerns

Most of the time, business people aren't nefarious characters trying to make things difficult for developers, but rather have priorities that drive inappropriate decisions from an architectural standpoint, which inadvertently constrain future options.

Pitfall: product customization

Salespeople want options to sell. The caricature of salespeople has them selling any requested feature before determining if their product actually contains that feature. Thus, salespeople want infinitely customizable software to sell. However, that capability comes at a cost along with a spectrum of implementation techniques, like a unique build for each customer, permanent feature toggles, and product-driven customization.

Customization also impedes evolvability. This shouldn't discourage companies from building customizable software, but they should realistically assess the associated costs.

Antipattern: reporting

Reporting is a good example of inadvertent coupling in monolithic architectures. Architects and DBAs want to use the same database schema for both system of record and reporting, but encounter problems because a design to support both is optimized for neither. A common pitfall developers and report designers conspire to create in layered architecture illustrates the tension between concerns. Architects build layered architecture to cut down on incidental coupling, creating layers of isolation and separation of concerns. However, reporting doesn't need separate layers to support its function, just data. Additionally, routing requests through layers adds latency. Thus, many organizations with good layered architectures allow report designers to couple reports directly to database schemas, destroying the ability to make changes to the schema without wrecking reports. This is a good example of conflicting business goals subverting the work of architects and making evolutionary change extremely difficult. While no one set out to make the system hard to evolve, it was the cumulative effect of decisions.

Many microservices architectures solve the reporting problem by separating behavior, where the isolation of services benefits separation but not consolidation. Architects commonly build these architectures using event streaming or message queues to populate domain "system of record" databases, each embedded within the architectural quantum of the service, using eventual consistency rather than transactional behavior. A set of reporting services also listens to the event stream, populating a denormalized reporting database optimized for reporting. Using eventual consistency frees architects from coordination — a form of coupling from an architectural standpoint — allowing different abstractions for different uses of the application.
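The event-stream approach to reporting described above can be sketched with a toy in-memory stream. This is only an illustration under stated assumptions: `EventStream`, `OrderService`, `ReportingService`, and the event fields are hypothetical, and delivery here is synchronous, whereas a real message queue would deliver asynchronously (hence eventual consistency).

```python
from collections import defaultdict


class EventStream:
    """Toy in-memory stand-in for an event stream or message queue."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def publish(self, event: dict):
        for handler in self._subscribers:
            handler(event)


class OrderService:
    """Owns the system-of-record data for orders inside its own quantum."""

    def __init__(self, stream: EventStream):
        self.orders = {}
        stream.subscribe(self.on_event)

    def on_event(self, event: dict):
        if event["type"] == "order_placed":
            self.orders[event["order_id"]] = event


class ReportingService:
    """Listens to the same stream and keeps a denormalized view for reports."""

    def __init__(self, stream: EventStream):
        self.revenue_by_customer = defaultdict(float)
        stream.subscribe(self.on_event)

    def on_event(self, event: dict):
        if event["type"] == "order_placed":
            self.revenue_by_customer[event["customer"]] += event["total"]


stream = EventStream()
orders = OrderService(stream)
reports = ReportingService(stream)
stream.publish({"type": "order_placed", "order_id": 1, "customer": "ana", "total": 30.0})
stream.publish({"type": "order_placed", "order_id": 2, "customer": "ana", "total": 12.5})
```

The reporting view never reads the order service's storage, so either side can change its schema without breaking the other.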

Pitfall: planning horizons

The Sunk Cost Fallacy describes decisions affected by emotional investment. Put simply, the more someone invests time or effort into something, the harder it becomes to abandon it. In software, this is seen in the form of the irrational artifact attachment — the more time and effort you invest in planning or a document, the more likely you will protect what's contained in the plan or document even in the face of evidence that it is inaccurate or outdated.

Beware of long planning cycles that force architects into irreversible decisions, and find ways to keep options open. Architects should avoid technologies that require a significant upfront investment before software is actually built (e.g., large licenses and support contracts) until they have validated through end-user feedback that the technology actually fits the problem they are trying to solve.

Thursday, January 30, 2020

Guidelines for building evolutionary architectures

Neal Ford, Rebecca Parsons & Patrick Kua, "Building Evolvable Architectures", in Building Evolutionary Architectures: Support Constant Change, 107-119.

[...] As much as we like to talk about architecture in pristine, idealized settings, the real world often exhibits a contrary mess of technical debt, conflicting priorities, and limited budgets. Architecture in large companies is built like the human brain: lower-level systems still handle critical plumbing details but have some old baggage. Companies hate to decommission something that works, leading to escalating integration architecture challenges.

Retrofitting evolvability into an existing architecture is challenging — if developers never built easy change into the architecture, it is unlikely to appear spontaneously. No architect, no matter how talented, can transform a Big Ball of Mud into a modern microservices architecture without immense effort. Fortunately, projects can receive benefits without changing their entire architecture by building some flexibility points into the existing one.

Remove needless variability

One of the goals of Continuous Delivery is stability — building on known good parts. A common manifestation of this goal is the modern DevOps perspective on building immutable infrastructure.

While immutability may sound like the opposite of evolvability, quite the opposite is true. Software systems comprise thousands of moving parts, all interlocking in tight dependencies. Unfortunately, developers still struggle with unanticipated side effects of changes to one of those parts. By locking down the possibility of unanticipated change, we control more of the factors that make systems fragile. Developers strive to replace variables in code with constants to reduce vectors of change. DevOps introduced this concept to operations, making it more declarative.

Immutable infrastructure follows our advice to remove needless variables. Building software systems that evolve means controlling as many unknown factors as possible.

Make decisions reversible

Many DevOps practices exist to allow reversible decisions: decisions that need to be undone. For example, blue/green deployments, where operations have two identical (probably virtual) ecosystems, one blue and one green, are common in DevOps.

Feature toggles are another common way developers make decisions reversible. By deploying changes underneath feature toggles, developers can release them to a small subset of users (called canary releasing) to vet the change. [...] Make sure you remove the outdated ones!

[...] Service routing — routing to a particular instance of a service based on request context — is another common method to canary release in microservices ecosystems.
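A feature toggle combined with canary releasing might look like the sketch below. It assumes a percentage-based rollout keyed on a hash of the user ID, which is one common choice rather than the only one; `in_canary`, `TOGGLES`, and `checkout_flow` are hypothetical names.

```python
import hashlib


def in_canary(user_id: str, feature: str, percent: int) -> bool:
    """Deterministically place a user in the canary group for a feature.

    Hashing keeps the assignment stable across requests without storing state.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return bucket < percent


# Hypothetical toggle table: feature name -> percentage of users who see it.
TOGGLES = {"new_checkout": 10}


def checkout_flow(user_id: str) -> str:
    """Pick the code path; setting the percentage to 0 reverses the decision."""
    if in_canary(user_id, "new_checkout", TOGGLES.get("new_checkout", 0)):
        return "new"
    return "old"
```

Reversing the decision is a configuration change rather than a redeploy, and deleting the toggle entry and the dead branch is the cleanup step the text asks for.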

Make as many decisions as possible reversible (without over-engineering).

Prefer evolvable over predictable

Unknown unknowns are the nemesis of software systems. Many projects start with a list of known unknowns: things developers know they must learn about the domain and technology. However, projects also fall victim to unknown unknowns: things no one knew were going to crop up yet have appeared unexpectedly. This is why all Big Design Up Front software efforts suffer: architects cannot design for unknown unknowns.

While no architecture can survive the unknown, we know that dynamic equilibrium renders predictability useless in software. Instead, we prefer to build evolvability into software: if projects can easily incorporate changes, architects don't need a crystal ball.

Build anticorruption layers

Projects often need to couple themselves to libraries that provide incidental plumbing: message queues, search engines, and so on. The abstraction distraction antipattern describes the scenario where a project "wires" itself too much to an external library, either commercial or open source. Once it becomes time for developers to upgrade or switch the library, much of the application code utilizing the library has baked-in assumptions based on the previous library abstractions. Domain-driven design includes a safeguard against this phenomenon called an anticorruption layer.

Agile architects prize the last responsible moment principle when making decisions, which is used to counter the common hazard in projects of buying complexity too early.

[...] Most developers treat crufty old code as the only form of technical debt, but projects can inadvertently buy technical debt as well via premature complexity.

At the last responsible moment, answer questions such as "Do I have to make this decision now?", "Is there a way to safely defer this decision without slowing any work?", and "What can I put in place now that will suffice but I can easily change later if needed?"

[...] Building an anticorruption layer encourages the architect to think about the semantics of what they need from the library, not the syntax of the particular API. But this is not an excuse to abstract all the things! Some development communities love preemptive layers of abstraction to a distracting degree, but understanding suffers when you must call a Factory to get a proxy to a remote interface to a Thing. Fortunately, most modern languages and IDEs allow developers to be just in time when extracting interfaces. If a project finds itself bound to an out-of-date library in need of change, the IDE can extract an interface on behalf of the developer, making a Just In Time (JIT) anticorruption layer.

Controlling the coupling points in an application, especially to external resources, is one of the key responsibilities of an architect. Try to find the pragmatic time to add dependencies. As an architect, remember dependencies provide benefits but also impose constraints. Make sure the benefits outweigh the cost in updates, dependency management, and so on.

Architects must understand both benefits and tradeoffs and build engineering practices accordingly.

Using anticorruption layers encourages evolvability. While architects can't predict the future, we can at least lower the cost of change so that it doesn't impact us so negatively.
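As an illustration of an anticorruption layer around a message queue library, consider the sketch below. `VendorQueueClient` and its `send_raw` method are invented stand-ins for a third-party client, not a real library API; the point is only that application code depends on a domain-level interface.

```python
from abc import ABC, abstractmethod


class OrderNotifier(ABC):
    """What the application needs, expressed in domain terms (the semantics)."""

    @abstractmethod
    def order_placed(self, order_id: int) -> None:
        ...


class VendorQueueClient:
    """Stand-in for a third-party message queue client; its API is invented here."""

    def __init__(self):
        self.sent = []

    def send_raw(self, topic: str, payload: bytes) -> None:
        self.sent.append((topic, payload))


class VendorQueueNotifier(OrderNotifier):
    """Anticorruption layer: the only place that knows the vendor's syntax."""

    def __init__(self, client: VendorQueueClient):
        self._client = client

    def order_placed(self, order_id: int) -> None:
        self._client.send_raw("orders", f"placed:{order_id}".encode("utf-8"))


def place_order(order_id: int, notifier: OrderNotifier) -> None:
    """Application code depends on the domain interface, never on the vendor."""
    notifier.order_placed(order_id)
```

Switching libraries then means writing one new `OrderNotifier` implementation rather than touching every call site.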

Build sacrificial architectures

[...] At an architectural level, developers struggle to anticipate radically changing requirements and characteristics. One way to learn enough to choose a correct architecture is to build a proof of concept. Martin Fowler defines a sacrificial architecture as an architecture designed to be thrown away if the concept proves successful.

Many companies build a sacrificial architecture to achieve a minimum viable product to prove a market exists. While this is a good strategy, the team must eventually allocate time and resources to build a more robust architecture [...].

One other aspect of technical debt impacts many initially successful projects, elucidated again by Fred Brooks, when he refers to the second system syndrome: the tendency of small, elegant, and successful systems to evolve into giant, feature-laden monstrosities due to inflated expectations. Business people hate to throw away functioning code, so architecture tends toward always adding, never removing or decommissioning.

Technical debt works effectively as a metaphor because it resonates with project experience, and represents faults in design, regardless of the driving forces behind them. Technical debt aggravates inappropriate coupling on projects — poor design frequently manifests as pathological coupling and other antipatterns that make restructuring code difficult. As developers restructure architecture, their first step should be to remove the historical design compromises that manifest as technical debt.

Mitigate external change

Most projects rely on a dizzying array of third-party components, applied via build tools. Developers like dependencies because they provide benefits, but many developers ignore the fact that they come with a cost as well. When relying on code from a third party, developers must create their own safeguards against unexpected occurrences: breaking changes, unannounced removal, and so on. Managing these external parts of projects is critical to creating evolutionary architecture.

We recommend that developers take a more proactive approach to dependency management. A good start on dependency management models external dependencies using a pull model. For example, set up an internal version-control repository to act as a third-party component store, and treat changes from the outside world as pull requests to that repository. If a beneficial change occurs, allow it into the ecosystem. However, if a core dependency disappears suddenly, reject that pull request as a destabilizing force.

Using a Continuous Delivery mindset, the third-party component repository utilizes its own deployment pipeline. When an update occurs, the deployment pipeline incorporates the change, then performs a build and smoke test on the affected applications. If successful, the change is allowed into the ecosystem. Thus, third-party dependencies use the same engineering practices and mechanisms of internal development, usefully blurring the lines across this often unimportant distinction between in-house written code and dependencies from third parties — at the end of the day, it's all code in a project.

Updating libraries versus frameworks

Because frameworks are a fundamental part of applications, teams must be aggressive about pursuing updates. Libraries generally form less brittle coupling points than frameworks do, allowing teams to be more casual about upgrades. One informal governance model treats framework updates as push updates and library updates as pull updates. When a fundamental framework (one whose afferent/efferent coupling numbers are above a certain threshold) updates, teams should apply the update as soon as the new version is stable and the team can allocate time for the change. Even though it will take time and effort, the time spent early is a fraction of the cost if the team perpetually procrastinates on the update.

Because most libraries provide utilitarian functionality, teams can afford to update them only when new desired functionality appears, using more of an "update when needed" model.
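As a rough illustration of the push/pull governance model described above, the sketch below decides whether a dependency update should be applied. The threshold value, the `DependencyUsage` fields, and the choice to compare the larger of the two coupling numbers against the threshold are all assumptions made for this example, not something the book prescribes.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real team would calibrate it against its own code base.
FUNDAMENTAL_COUPLING_THRESHOLD = 25


@dataclass(frozen=True)
class DependencyUsage:
    name: str
    afferent: int  # incoming coupling count measured for this dependency
    efferent: int  # outgoing coupling count measured for this dependency
    new_version_is_stable: bool
    needed_feature_available: bool


def is_fundamental(usage: DependencyUsage) -> bool:
    # Assumption: a dependency counts as "fundamental" (framework-like)
    # when either coupling number exceeds the threshold.
    return max(usage.afferent, usage.efferent) > FUNDAMENTAL_COUPLING_THRESHOLD


def should_update(usage: DependencyUsage) -> bool:
    if is_fundamental(usage):
        # Push model: take the update as soon as the new version is stable.
        return usage.new_version_is_stable
    # Pull model: update only when the new version offers something we need.
    return usage.needed_feature_available


framework = DependencyUsage("web-framework", 120, 8, True, False)
library = DependencyUsage("string-utils", 3, 1, True, False)
print(should_update(framework), should_update(library))
```

The "team can allocate time" condition from the text is left out on purpose, since it is a scheduling decision rather than something code can observe.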

Prefer continuous delivery to snapshots

Continuous Delivery suggested a more nuanced way to think about dependencies, repeated here. Currently, developers only have static dependencies, linked via version numbers captured as metadata in a build file somewhere. However, this isn't sufficient for modern projects, which need a mechanism to indicate speculative updating. Thus, as the book suggests, developers should introduce two new designations for external dependencies: fluid and guarded. Fluid dependencies try to automatically update themselves to the next version, using mechanisms like deployment pipelines. For example, say that order fluidly relies on version 1.2 of framework. When framework updates itself to version 1.3, order tries to incorporate that change via its deployment pipeline, which is set up to rebuild the project anytime any part of it changes. If the deployment pipeline runs to completion, the fluid dependency between the components is updated. However, if something prevents successful completion (a failed test, a broken diamond dependency, or some other problem), the dependency is updated to a guarded reliance on framework 1.2, which means the developer should try to determine and fix the problem, restoring the fluid dependency. If the component is truly incompatible, developers create a permanent static reference to the old version, eschewing future automatic updates.

None of the popular build tools support this level of functionality yet; developers must build this intelligence atop existing build tools. However, this model of dependencies works extremely well in evolutionary architectures, where cycle time is a critical foundational value, being proportional to many other key metrics.
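Since the excerpt notes that no popular build tool supports fluid and guarded dependencies, teams have to model the transitions themselves. The following is a minimal sketch of that state machine, assuming a hypothetical `pipeline_passes` callback that stands in for a real deployment pipeline run; the `Mode` and `Dependency` names are invented for illustration.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Callable


class Mode(Enum):
    FLUID = "fluid"      # try every new version automatically
    GUARDED = "guarded"  # held at the last good version until someone investigates
    STATIC = "static"    # permanently pinned, no automatic updates


@dataclass(frozen=True)
class Dependency:
    name: str
    version: str
    mode: Mode = Mode.FLUID


def on_new_version(
    dep: Dependency,
    new_version: str,
    pipeline_passes: Callable[[str, str], bool],
) -> Dependency:
    """React to a newly published version of a dependency."""
    if dep.mode is not Mode.FLUID:
        # Guarded and static dependencies ignore automatic updates.
        return dep
    if pipeline_passes(dep.name, new_version):
        # The pipeline ran to completion: adopt the new version, stay fluid.
        return replace(dep, version=new_version)
    # Something failed: keep the old version and flag it for investigation.
    return replace(dep, mode=Mode.GUARDED)


def restore_fluid(dep: Dependency) -> Dependency:
    """Call once a developer has found and fixed the problem."""
    return replace(dep, mode=Mode.FLUID)


def pin_static(dep: Dependency) -> Dependency:
    """Call when the new version is truly incompatible."""
    return replace(dep, mode=Mode.STATIC)


framework = Dependency("framework", "1.2")
failed = on_new_version(framework, "1.3", lambda name, version: False)
print(failed.mode.value, failed.version)
```

In the example from the text, order's reliance on framework 1.2 would move to guarded when the 1.3 pipeline run fails, and back to fluid after `restore_fluid`.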

Version services internally

[...] Developers use two common patterns to version endpoints, version numbering or internal resolution. For version numbering, developers create a new endpoint name, often including the version number, when a breaking change occurs. [...] The alternative is internal resolution, where callers never change the endpoint — instead, developers build logic into the endpoint to determine the context of the caller, returning the correct version. The advantage of retaining the name forever is less coupling to specific version numbers in calling applications.

In either case, severely limit the number of supported versions. The more versions, the more testing and other engineering burdens. Strive to support only two versions at a time, and only temporarily.
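Internal resolution can be sketched as a single entry point that inspects the caller's context and dispatches to the matching implementation. Everything named here is hypothetical: the `contract_version` key, the two handler functions, and the decision to treat callers that send no version as clients of the oldest supported contract.

```python
from typing import Callable, Dict

MAX_SUPPORTED_VERSIONS = 2  # the text recommends supporting only two at a time


def order_summary_v1(order: dict) -> dict:
    # Original contract: total is a bare number.
    return {"id": order["id"], "total": order["total"]}


def order_summary_v2(order: dict) -> dict:
    # Breaking change: total becomes an object carrying a currency.
    return {
        "id": order["id"],
        "total": {"amount": order["total"], "currency": order["currency"]},
    }


HANDLERS: Dict[int, Callable[[dict], dict]] = {
    1: order_summary_v1,
    2: order_summary_v2,
}
assert len(HANDLERS) <= MAX_SUPPORTED_VERSIONS


def get_order_summary(order: dict, caller_context: dict) -> dict:
    """Single endpoint name; the version is resolved from the caller's context."""
    # Assumption: callers that predate versioning send nothing and get the oldest contract.
    version = int(caller_context.get("contract_version", min(HANDLERS)))
    handler = HANDLERS.get(version)
    if handler is None:
        raise ValueError(f"unsupported contract version: {version}")
    return handler(order)


order = {"id": 7, "total": 10, "currency": "USD"}
print(get_order_summary(order, {}))
print(get_order_summary(order, {"contract_version": 2}))
```

Retiring a version then means deleting one entry from `HANDLERS`, and callers never see an endpoint rename.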

Sunday, January 26, 2020

Migrating architectures

Neal Ford, Rebecca Parsons & Patrick Kua, "Building Evolvable Architectures", in Building Evolutionary Architectures: Support Constant Change, 100-103.

Many companies end up migrating from one architectural style to another. One of the most common paths of migration is from monolith to some kind of service-based architecture, for reasons of the general domain-centric shift in architectural thinking [...]. Many architects are tempted by the highly evolutionary microservices architecture as a target for migration, but this is often quite difficult, primarily because of existing coupling.

When architects think of migrating architecture, they typically think of the coupling characteristics of classes and components, but ignore many other dimensions affected by evolution, such as data. Transactional coupling is as real as coupling between classes, and just as insidious to eliminate when restructuring architecture. These extra-class coupling points become a huge burden when trying to break the existing modules into too-small pieces.

Many senior developers build the same types of applications year after year, and become bored with the monotony. Most developers would rather write a framework than use a framework to create something useful: Meta-work is more interesting than work. Work is boring, mundane, and repetitive, whereas building new stuff is exciting.

Architects aren't immune to the "meta-work is more interesting than work" syndrome, which manifests in choosing inappropriate but buzz-worthy architectural styles like microservices.

Don't build an architecture just because it will be fun meta-work.

Migration steps

Many architects find themselves faced with the challenge of migrating an outdated monolithic application to a more modern service-based approach. Experienced architects realize that a host of coupling points exist in applications, and one of the first tasks when untangling a code base is understanding how things are joined. When decomposing a monolith, the architect must take coupling and cohesion into account to find the appropriate balance. For example, one of the most stringent constraints of the microservices architectural style is the insistence that the database reside inside the service's bounded context. When decomposing a monolith, even if it is possible to break the classes into small enough pieces, breaking the transactional contexts into similar pieces may present an insurmountable hurdle.

Architects must understand why they want to perform this migration, and it must be a better reason than "it's the current trend". Splitting the architecture into domains, along with better team structure and operational isolation, allows for easier incremental change, one of the building blocks of evolutionary architecture, because the focus of work matches the physical work artifacts.

When decomposing a monolithic architecture, finding the correct service granularity is key. Creating large services alleviates problems like transactional contexts and orchestration, but does little to break the monolith into smaller pieces. Too-fine-grained components lead to too much orchestration, communication overhead, and interdependency between components.

Evolving module interactions

Migrating shared modules (including components) is another common challenge faced by developers. [...] Sometimes, the library may be split cleanly, preserving the separate functionality each module needs. [...] However, it's more likely the shared library won't split that easily. In that case, developers can extract the module into a shared library (such as a JAR, DLL, gem, or some other component mechanism) and use it from both locations.

Sharing is a form of coupling, which is highly discouraged in architectures like microservices. An alternative to sharing a library is replication.

In a distributed environment, developers may achieve the same kind of sharing using messaging or service invocation.

When developers have identified the correct service partitioning, the next step is separation of the business layers from the UI. Even in microservices architectures, the UIs often resolve back to a monolith — after all, developers must show a unified UI at some point. Thus, developers commonly separate the UIs early in the migration, creating a mapping proxy layer between UI components and the back-end services they call. Separating the UI also creates an anticorruption layer, insulating UI changes from architecture changes.

The next step is service discovery, allowing services to find and call one another. Eventually, the architecture will consist of services that must coordinate. By building the discovery mechanism early, developers can slowly migrate parts of the system that are ready to change. Developers often implement service discovery as a simple proxy layer: each component calls the proxy, which in turn maps to the specific implementation.

Dave Wheeler and Kevlin Henney

All problems in computer science can be solved by another level of indirection, except of course for the problem of too many indirections.

Of course, the more levels of indirection developers add, the more confusing navigating the services becomes.
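The "simple proxy layer" form of service discovery described above can be sketched in a few lines. `DiscoveryProxy`, the `"pricing"` route name, and both pricing functions are hypothetical, and a real deployment would map names to network endpoints instead of in-process callables.

```python
from typing import Callable, Dict


class DiscoveryProxy:
    """Maps a logical service name to whatever currently implements it."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[..., object]] = {}

    def register(self, name: str, implementation: Callable[..., object]) -> None:
        # Re-registering a name swaps the implementation without touching callers.
        self._routes[name] = implementation

    def call(self, name: str, *args, **kwargs):
        try:
            implementation = self._routes[name]
        except KeyError:
            raise LookupError(f"no implementation registered for {name!r}") from None
        return implementation(*args, **kwargs)


def monolith_price(sku: str) -> int:
    # Stand-in for the code path that still lives inside the monolith.
    return 10


def pricing_service_price(sku: str) -> int:
    # Stand-in for a client of the extracted pricing service.
    return 12


proxy = DiscoveryProxy()
proxy.register("pricing", monolith_price)
print(proxy.call("pricing", "A1"))

# Later in the migration, only the registration changes.
proxy.register("pricing", pricing_service_price)
print(proxy.call("pricing", "A1"))
```

Callers depend only on the name `"pricing"`, which is what lets parts of the system migrate one at a time; the cost is the extra hop the indirection quote warns about.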

When migrating an application from a monolithic application architecture to a more service-based one, the architect must pay close attention to how modules are connected in the existing application. Naive partitioning introduces serious performance problems. The connection points in the application become integration architecture connections, with the attendant latency, availability, and other concerns. Rather than tackle the entire migration at once, a more pragmatic approach is to gradually decompose the monolith into services, looking at factors like transaction boundaries, structural coupling, and other inherent characteristics to create several restructuring iterations. At first, break the monolith into a few large "portions of the application" chunks, fix up the integration points, and rinse and repeat. Gradual migration is preferred in the microservices world.

Next, developers choose and detach the chosen service from the monolith, fixing any calling points. Fitness functions play a critical role here — developers should build fitness functions to make sure the newly introduced integration points don't change, and add consumer-driven contracts.
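One way to picture a fitness function guarding an integration point is a check that a provider's response still satisfies what each consumer expects. The sketch below is a simplified, hand-rolled take on a consumer-driven contract, not the API of any contract-testing tool; the field names and the `contract_violations` helper are made up for the example.

```python
from typing import Dict, List


def contract_violations(response: dict, contract: Dict[str, type]) -> List[str]:
    """Return every way the response breaks the consumer's expectations."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


# Hypothetical contract published by a consumer of the detached service.
billing_contract = {"order_id": int, "status": str}

good_response = {"order_id": 1, "status": "shipped", "carrier": "ACME"}
bad_response = {"order_id": "1"}

print(contract_violations(good_response, billing_contract))
print(contract_violations(bad_response, billing_contract))
```

Extra fields in the response are deliberately allowed, so the provider can evolve as long as it keeps what consumers rely on. Run as part of the deployment pipeline, a non-empty result would fail the build.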

Refactoring and performance

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 20.

[...] Most programmers, even experienced ones, are poor judges of how code actually performs. Many of our intuitions are broken by clever compilers, modern caching techniques, and the like. The performance of software usually depends on just a few parts of the code, and changes anywhere else don't make an appreciable difference.

But "mostly" isn't the same as "alwaysly". Sometimes a refactoring will have a significant performance implication. Even then, I usually go ahead and do it, because it's much easier to tune the performance of well-factored code. If I introduce a significant performance issue during refactoring, I spend time on performance tuning afterwards. It may be that this leads to reversing some of the refactoring I did earlier — but most of the time, due to the refactoring, I can apply a more effective performance-tuning enhancement instead. I end up with code that's both clearer and faster.

So, my overall advice on performance with refactoring is: Most of the time you should ignore it. If your refactoring introduces performance slow-downs, finish refactoring first and do performance tuning afterwards.

Saturday, January 25, 2020

The first step in refactoring

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 5.

Before you start refactoring, make sure you have a solid suite of tests. These tests must be self-checking.
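"Self-checking" means the test decides pass or fail on its own, with no one reading printed output. A minimal sketch using Python's standard `unittest` module follows; `total_price` is a hypothetical function standing in for the code about to be refactored.

```python
import unittest


def total_price(quantity: int, unit_price: int) -> int:
    """Hypothetical code under refactoring: 10% off orders of 10 items or more."""
    total = quantity * unit_price
    if quantity >= 10:
        total -= total // 10
    return total


class TotalPriceTest(unittest.TestCase):
    def test_small_order_pays_full_price(self):
        # The assertion compares against a fixed expected value by itself.
        self.assertEqual(total_price(2, 50), 100)

    def test_bulk_order_gets_discount(self):
        self.assertEqual(total_price(10, 50), 450)
```

Saved as a module, this can be run with `python -m unittest` after each small refactoring step; a failure points at the step that changed behavior.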

The purpose of refactoring

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 4-5.

Given that the program works, isn't any statement about its structure merely an aesthetic judgment, a dislike of "ugly" code? After all, the compiler doesn't care whether the code is ugly or clean. But when I change the system, there is a human involved, and humans do care. A poorly designed system is hard to change, because it is difficult to figure out what to change and how these changes will interact with the existing code to get the behavior I want. And if it is hard to figure out what to change, there is a good chance that I will make mistakes and introduce bugs.

Thus, if I'm faced with modifying a program with hundreds of lines of code, I'd rather it be structured into a set of functions and other program elements that allow me to understand more easily what the program is doing. If the program lacks structure, it's usually easier for me to add structure to the program first, and then make the change I need.

Let me stress that it's these changes that drive the need to perform refactoring. If the code works and doesn't ever need to change, it's perfectly fine to leave it alone. It would be nice to improve it, but unless someone needs to understand it, it isn't causing any real harm. Yet as soon as someone does need to understand how that code works, and struggles to follow it, then you have to do something about it.

Martin Fowler, "Refactoring: A First Example", in Refactoring: Improving the Design of Existing Code (2nd Edition), 10.

Any fool can write code that a computer can understand. Good programmers write code that humans can understand.

Saturday, January 18, 2020

Refactoring versus restructuring

Neal Ford, Rebecca Parsons & Patrick Kua, "Building Evolvable Architectures", in Building Evolutionary Architectures: Support Constant Change, 99.

Developers sometimes co-opt terms that sound cool and make them into broader synonyms, as is the case for refactoring. As defined by Martin Fowler, refactoring is the process of restructuring existing computer code without changing its external behavior. For many developers, refactoring has become synonymous with change, but there are key differences.